Kinetic

Messages bouncing around

September 20, 2012

Career Advice

April 18, 2012

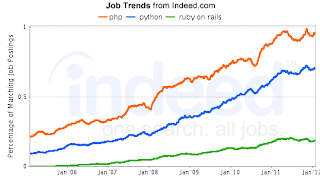

PHP: you know it's good because semicolons are required

After getting a link to a "community index" for PHP, I thought I'd check with indeed.com to see what they say about a few languages. It looks like PHP isn't dead yet.

December 13, 2011

Mobile and Web job trends

September 05, 2011

MongoDB replica sets - high level overview

Here is a very, very brief overview of MongoDB replica sets, and a tip for enabling read access on the read-only replica slaves:

http://www.codypowell.com/taods/2011/08/a-cloud-hosting-architecture-for-mongodb.html

The full documentation is on the MongoDB site: http://www.mongodb.org/display/DOCS/Replica+Sets
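The tip itself didn't survive in this post, so here is a hedged sketch of the idea. The old `mongo` shell let you read from a secondary with `rs.slaveOk()`, and current drivers express the same thing as a read preference that can go straight into the connection string. `replicaSet` and `readPreference` are standard connection string options; the `buildReplicaSetUri` helper, the host names and the `rs0` set name are hypothetical.

```javascript
// Hypothetical helper: builds a MongoDB connection string for a replica set
// that allows reads from secondaries. "replicaSet" and "readPreference" are
// standard connection string options; the hosts below are placeholders.
const READ_PREFERENCES = ["primary", "primaryPreferred", "secondary",
                          "secondaryPreferred", "nearest"];

function buildReplicaSetUri(hosts, replicaSetName, readPreference) {
  if (!READ_PREFERENCES.includes(readPreference)) {
    throw new Error("unknown read preference: " + readPreference);
  }
  const params = new URLSearchParams({
    replicaSet: replicaSetName,
    readPreference: readPreference
  });
  return "mongodb://" + hosts.join(",") + "/?" + params.toString();
}

// Example: prefer secondaries for reads, fall back to the primary.
const uri = buildReplicaSetUri(
  ["db1.example.com:27017", "db2.example.com:27017"],
  "rs0",
  "secondaryPreferred"
);
console.log(uri);
```

Keep in mind that secondaries replicate asynchronously, so reads routed to them can return slightly stale data.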

March 29, 2011

Browser geolocation APIs

Here is a sample script showing how you could use the browser geolocation API in your mobile or location-aware web apps:

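```javascript
// Called each time the browser reports a position.
// savePosition, createCookie, readCookie and updateLocationDisplay are
// app-specific helpers defined elsewhere on the page.
function onLocationUpdated(position)
{
  // do something useful
  savePosition(position);
  createCookie("s_geo", "on", 3600);
  updateLocationDisplay(position);
}

// Called if the position can't be determined (permission denied,
// position unavailable, or timeout).
function onLocationError(error)
{
  console.log("geolocation error " + error.code + ": " + error.message);
}

// request location, unless the s_geo cookie shows a position was already saved
if (navigator.geolocation && !readCookie("s_geo"))
{
  navigator.geolocation.getCurrentPosition(onLocationUpdated, onLocationError);

  var watchID = navigator.geolocation.watchPosition(
    onLocationUpdated, onLocationError, {
      enableHighAccuracy : true,
      timeout : 30000 // milliseconds
    });
}
```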
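The script above keeps `watchID` but never uses it, so the position watch keeps running for as long as the page is open, which can cost battery on a phone. As a hedged sketch, a hypothetical `watchUntilAccurate` helper can stop watching with `clearWatch` once a fix is accurate enough. `watchPosition`, `clearWatch` and `coords.accuracy` are part of the Geolocation API; the helper name and the accuracy threshold are assumptions.

```javascript
// Hypothetical helper: watch the position until a fix is at least as accurate
// as maxAccuracyMeters, then clear the watch and report that fix once.
// `geolocation` is normally navigator.geolocation; passing it in makes the
// function easy to exercise with a stand-in object.
function watchUntilAccurate(geolocation, maxAccuracyMeters, onFix, onError) {
  let watchID = null;
  let done = false;
  watchID = geolocation.watchPosition(
    function (position) {
      // coords.accuracy is a radius in meters (95% confidence)
      if (done || position.coords.accuracy > maxAccuracyMeters) return;
      done = true;
      geolocation.clearWatch(watchID);
      onFix(position);
    },
    onError,
    { enableHighAccuracy: true, timeout: 30000 }
  );
  return watchID;
}
```

On a page you would call it as `watchUntilAccurate(navigator.geolocation, 100, onLocationUpdated)`; the 100 meter threshold is only an example.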

geonote.org - sharing the world around you

Rather than try to build up functionality and features to attract a crowd, it seemed that showing information that already exists would be a good way to bootstrap the app. Since I originally envisioned this app as something like Wikipedia for places, but more of an open medium that people can use for whatever purpose they like, I first looked at ways to index Wikipedia entries by their geo location. I quickly found that other folks had already done the indexing and provided an API: geonames.org. Pulling this data in was pretty easy; they have a simple HTTP API that returns XML, which geonote.org simply formats into a mobile-friendly display. Once there was a web app for sharing notes and viewing 'atlas' pages (the Wikipedia entries), I went in search of other location-based APIs and found several great ones.
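A hedged sketch of what such a request can look like, using GeoNames' `findNearbyWikipedia` service, which returns nearby Wikipedia entries as XML (a `findNearbyWikipediaJSON` variant also exists). The `nearbyWikipediaUrl` helper and the sample coordinates are assumptions, `demo` stands in for a real account name (current GeoNames requests need a registered `username`), and `radius` is in kilometers.

```javascript
// Hypothetical helper: builds a GeoNames findNearbyWikipedia request URL.
// lat, lng, username, radius (km) and maxRows are GeoNames parameters;
// the helper itself is only an illustration.
function nearbyWikipediaUrl(lat, lng, username, options) {
  const opts = options || {};
  const params = new URLSearchParams({
    lat: String(lat),
    lng: String(lng),
    username: username
  });
  if (opts.radiusKm !== undefined) params.set("radius", String(opts.radiusKm));
  if (opts.maxRows !== undefined) params.set("maxRows", String(opts.maxRows));
  return "http://api.geonames.org/findNearbyWikipedia?" + params.toString();
}

// Example: up to 10 entries within 5 km of downtown Seattle.
const wikiUrl = nearbyWikipediaUrl(47.6062, -122.3321, "demo",
                                   { radiusKm: 5, maxRows: 10 });
console.log(wikiUrl);
```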

Here's the list of geolocation APIs I've used so far:

- GeoNames.org (Wikipedia entries and more) - http://www.geonames.org/export/ws-overview.html

- Flickr.com (Photos) - http://www.flickr.com/services/api/

- Plancast.com (Events) - http://groups.google.com/group/plancast-api/web/overview?pli=1

- Hunch (Recommendations) - http://hunch.com/developers/v1/

- Twitter (chitter chatter) - http://apiwiki.twitter.com/Twitter-API-Documentation
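As one hedged example from the list above, a photo lookup near a point can go through Flickr's REST endpoint with the `flickr.photos.search` method and its `lat`, `lon` and `radius` parameters (radius defaults to kilometers). The `flickrNearbyPhotosUrl` helper and the `YOUR_API_KEY` value are placeholders; `format=json` with `nojsoncallback=1` asks for plain JSON instead of a JSONP wrapper.

```javascript
// Hypothetical helper: builds a Flickr photo search URL for a location.
// method, api_key, lat, lon, radius, format and nojsoncallback are Flickr
// API parameters; the API key passed in is a placeholder.
function flickrNearbyPhotosUrl(apiKey, lat, lon, radiusKm) {
  const params = new URLSearchParams({
    method: "flickr.photos.search",
    api_key: apiKey,
    lat: String(lat),
    lon: String(lon),
    radius: String(radiusKm),
    format: "json",
    nojsoncallback: "1"
  });
  return "https://api.flickr.com/services/rest/?" + params.toString();
}

const flickrUrl = flickrNearbyPhotosUrl("YOUR_API_KEY", 47.6062, -122.3321, 2);
console.log(flickrUrl);
```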

The Plancast crew was especially helpful. Their forum described upcoming support for searching by latitude and longitude, but it had not been released at the time. After I posted a comment, they built and released that feature in only a few days (on a weekend, too!).

One of the most intriguing APIs was the Hunch API for recommendations. It has a lot of power, but it requires a Twitter username to provide personalized recommendations, and the geonote.org app is too simple to justify real Twitter authentication integration. I'll certainly revisit the Hunch API, though.

March 13, 2011

Mobile webapps and the jQuery Mobile library

You can see the results at http://geonote.org/places/plans for the 'from scratch' look and http://m.geonote.org/places/plans for the jQuery Mobile look.

The first thing to take to heart is the spartan look of mobile web apps. There simply isn't room for multiple crowded top and side nav bars, or for the data-dense (but information-poor) layouts of most sites. Take a look at a sample page from AllRecipes (which is a great site) - http://allrecipes.com/Cook/SHORECOOK/Photo.aspx?photoID=602783 - there are nav bars for site sections, tabs, breadcrumbs, sub-page navigation and so on, not to mention a right nav bar with even more links. These are all useful, I'm sure, but for a mobile web app you need to start from a blank page, work your way up and consider the information value of each pixel used. (Every pixel is sacred, every pixel is great. If any pixel is wasted, Tufte gets quite irate.) Another way to think of this is to consider each link as an internal advertisement for a page the user doesn't want to visit. There is a name for unwanted links put on a page for commercial gain, and that is 'spam'. Don't let your designs become link-spammy.

Next, you will want a way to preview your web app on a mobile device. If you have a modern phone, you can point its browser at your local dev environment; another way is to use an iframe wrapped in a phone mockup. The one I use is http://geonote.org/html/iphone/ (there may be better mobile browser emulators, but I didn't spend much time looking once the iframe-based "emulator" was working).

Building pages for the 'from scratch' look follows the typical web app development path: you can use almost any framework you are comfortable with, but be careful with approaches that are 'client heavy'. You'll want the smallest HTML, few images and the fewest resources downloaded to render each page.

Many scripting libraries have a way to package only the necessary modules into a single resource, which cuts down the network time needed to render the page. Personally, I avoid client libraries, since they are mostly meant for whiz-bang interactivity, and on a mobile device the interaction feels better when it is as direct as possible. Common web app performance advice applies here: caching is your friend, the network is not.
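As one hedged illustration of "caching is your friend", a server can mark static assets as long-lived and make HTML revalidate. The `cacheControlFor` helper, the extension list and the one-year lifetime are assumptions; `public`, `max-age`, `immutable` and `no-cache` are standard Cache-Control directives.

```javascript
// Hypothetical caching policy: long-lived caching for static assets, and
// revalidation for HTML. Only safe if an asset's URL changes whenever its
// content does (e.g. a version number in the file name).
const ONE_YEAR_SECONDS = 365 * 24 * 60 * 60;

function cacheControlFor(pathname) {
  if (/\.(css|js|png|jpe?g|gif|svg|woff2?)$/i.test(pathname)) {
    return "public, max-age=" + ONE_YEAR_SECONDS + ", immutable";
  }
  // HTML and everything else: the browser may store it but must check
  // with the server before reusing it.
  return "no-cache";
}
```

In a node.js server you might apply it with `res.setHeader("Cache-Control", cacheControlFor(pathname))`, where `pathname` is the request path without its query string.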

The jQuery Mobile look was the most interesting part of building the UI for this site. I was really looking forward to getting a native look and feel for free. Although the library is currently at the Alpha 3 stage, it's very usable and I haven't run into any bugs in my limited testing. jQuery Mobile changes how you think about browser-based pages. Not only does it try to use Ajax for most things, it also introduces "compound pages", which result in an ever-growing DOM of 'sub pages' or panels that are shown and hidden during screen navigation. This lets jQuery Mobile perform the animated transitions between screens that give the hip, captivating 'mobile look'.

The downside of the Ajax approach is its use of local anchors (the part of a URL after the '#' character) for tracking state. While this is certainly a popular, Ajaxy way of doing things, it has its problems. If you aren't familiar with the details, it really mucks up how you build pages: things simply stop working and the page breaks (requiring the user to refresh it manually). I still don't have forms working, and I had to disable Ajax loading of some pages because of this hash-based URL trickery. You will need to rigorously test every page and every transition between pages to ensure that it actually works.

Another downside of jQuery Mobile is that user interaction is noticeably slower than with a simple HTML and CSS page. At times it barely feels "interactive", which is not a good thing for client applications. There is a lot of promise, though, and I haven't even looked at jQuery Mobile's built-in capabilities for wider-screen devices like tablets.

August 15, 2010

Non-blocking operations and deferred execution with node.js

Node.js is an environment for writing JavaScript-based server applications with a big twist: I/O operations are non-blocking by default. This introduces a concurrency model that may be new to most developers, but it enables node.js applications to handle a huge number of concurrent operations - it scales like crazy.

Using non-blocking operations means that code which would normally wait for data from a disk file or a network connection does not tie up a thread while it waits: your code returns control to the runtime environment and is called later, when the data actually is available. Meanwhile the runtime can execute other code whose data is ready, and it gains efficiency by avoiding context switches between threads. It also means a single thread runs your JavaScript, so no locks or semaphores are needed to prevent data corruption from concurrent access, making your application even more efficient.

Although writing applications in JavaScript makes node.js very approachable, non-blocking operations aren't common in most server applications, and they result in code that looks similar to, but is oddly different from, what most developers are used to. For example, consider a simple program that reads data from a file and processes that data. In a typical procedural program the steps would be:

```
file = open("filename");
read(file,buffer);
close(file);
do_something(buffer);
```

This pseudo-code example is easy to understand and probably familiar to most developers. The step-by-step sequence of operations is the way most languages work and how most application logic is described. However, in a non-blocking version the open() function returns immediately - even though the file is not yet open. This introduces some challenges.

```
file = open("filename");
// the 'file' is not yet open! what to do?
read(file,buffer);
close(file);
do_something(buffer);
```

If the open() function were a blocking operation, the runtime environment would suspend the remaining sequence of operations until the data was available and then pick up where it left off. In node.js, the code after a non-blocking operation is paused and resumed later through callback functions. All the steps that follow the call to open() are bundled into a new function, and that bundle of steps is passed as a parameter to open() itself. open() returns immediately, and your code can either do some work unrelated to the not-yet-available data or simply return control to the runtime environment by exiting the current function.

When the data for the opened file actually does become available your callback function is invoked by the runtime and your bundle of steps will then proceed.
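A small runnable illustration of that ordering, using the real `fs.readFile` (the `showCallbackOrder` name is just for illustration): the statement after the call runs before the callback does.

```javascript
const fs = require("fs");

// Records the order in which things happen around a non-blocking read.
// fs.readFile returns immediately; its callback runs later, after the
// current function has finished and the data is available.
function showCallbackOrder(filePath, done) {
  const events = [];
  events.push("before readFile");
  fs.readFile(filePath, "utf8", function (err, text) {
    events.push(err ? "error: " + err.code : "callback: read " + text.length + " chars");
    done(events);
  });
  events.push("after readFile");
}
```

Given any readable file, the recorded order is "before readFile", "after readFile", then the callback entry, because node.js does not run an I/O callback before the current function returns.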

```
open("filename", function (f) {
    read(f,buffer);
    close(f);
    do_something(buffer);
});
```

The parameters to the callback function are defined by the non-blocking operation. In node.js, the callback for opening a file receives an error object (set if opening the file fails) and a file descriptor that can be used to actually read data. Most node.js callbacks follow this "error-first" pattern: an error object first, followed by the desired data.
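A hedged sketch of the error-first convention with the real `fs.open`: the callback checks the error before touching the file descriptor, and a wrapper passes failures up in the same shape. The `openForReading` name and the `does-not-exist.txt` file name are illustrative.

```javascript
const fs = require("fs");

// fs.open calls back with (err, fd). On failure err is set (err.code names
// the cause, e.g. "ENOENT" for a missing file); on success err is null and
// fd is a numeric file descriptor.
function openForReading(filePath, callback) {
  fs.open(filePath, "r", function (err, fd) {
    if (err) {
      callback(err);          // pass the error up, same convention
      return;
    }
    callback(null, fd);
  });
}

openForReading("does-not-exist.txt", function (err, fd) {
  if (err) {
    console.log("open failed: " + err.code);
    return;
  }
  fs.close(fd, function () {});
});
```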

In the non-blocking example above you may have noticed the read(f,buffer) call and guessed that it might also be a non-blocking operation. If so, it needs its own callback function holding the remaining operations to run once the data has been read into the buffer.

```
open("filename", function (f) {
    read(f,buffer, function(err,count) {
        close(f);
        do_something(buffer);
    });
});
```

Some people feel this is a natural way to structure your code. Those people would be wrong.

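Here is an actual node.js example of reading from a file:

```javascript
var fs = require('fs');

// Open the file for reading; the callback receives (err, fd).
fs.open("sample.txt", 'r', function (err, fd) {
  if (err) throw err;
  var buffer = Buffer.alloc(10000);
  // Read up to 10000 bytes from the start of the file into buffer;
  // the callback receives (err, bytesRead, buffer).
  fs.read(fd, buffer, 0, buffer.length, 0, function (err, bytesRead) {
    fs.close(fd, function () {});
    if (err) throw err;
    console.log(buffer.toString('utf8', 0, bytesRead));
  });
});
```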
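Newer versions of node.js also offer promise-based file functions in `fs.promises`, and with `async`/`await` the same read can be written in the step-by-step shape of the first pseudocode while still being non-blocking. A hedged sketch; the `readStart` name is hypothetical, and the 10000-byte limit mirrors the example above.

```javascript
const fsp = require("fs").promises;

// Reads up to maxBytes from the start of a file and returns it as a string.
// Each await hands control back to the runtime, like the nested callbacks.
async function readStart(filePath, maxBytes) {
  const handle = await fsp.open(filePath, "r");
  try {
    const buffer = Buffer.alloc(maxBytes);
    const { bytesRead } = await handle.read(buffer, 0, maxBytes, 0);
    return buffer.toString("utf8", 0, bytesRead);
  } finally {
    await handle.close();  // runs even if the read fails
  }
}

readStart("sample.txt", 10000)
  .then(text => console.log(text))
  .catch(err => console.error("read failed: " + err.message));
```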

This may appear a bit complex for such a simple task, and you can imagine what happens with more complex application logic, but the benefit of this approach becomes apparent in more interesting situations. For example, consider reading two files and merging their contents. Normally a program would read one file, then the other, then merge the results, so the total time is the sum of the two read times. With non-blocking operations, both reads can be started at the same time, and the total time is roughly the longer of the two reads.
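A minimal sketch of that two-file case with plain callbacks: both `fs.readFile` calls start immediately, and a counter tracks when both have finished. The `readBothAndMerge` name is hypothetical, and "merge" here simply means concatenating the two files in argument order.

```javascript
const fs = require("fs");

// Starts both reads at once and calls back once both are done, so the wait
// is roughly the slower of the two reads rather than their sum.
function readBothAndMerge(pathA, pathB, callback) {
  const results = [];
  let pending = 2;
  let failed = false;

  [pathA, pathB].forEach(function (p, index) {
    fs.readFile(p, "utf8", function (err, text) {
      if (failed) return;            // an error was already reported
      if (err) {
        failed = true;
        return callback(err);
      }
      results[index] = text;         // keep argument order, not finish order
      pending -= 1;
      if (pending === 0) callback(null, results[0] + results[1]);
    });
  });
}
```

With promises, `Promise.all` expresses the same "wait for both" logic without the manual counter.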

January 15, 2010

Hiring a Sr Engineer at the Rubicon Project

Hey everybody - I'm looking to hire a few engineers and thought I'd send out a note to let you all know. The Rubicon Project is truly an /awesome/ company to work for and the work we are doing is really exciting, challenging, very high scale and fun! It's like a startup - with benefits. So if you are ready to take charge of some big technology or know someone that is up to it, please shoot me an email. I've included the obligatory job description below. The position is in Seattle by the way.

Mike

Sr Software Engineer

the Rubicon Project is looking for several senior software engineers to help build out new products and features for the Data Intelligence area of our cutting edge online advertising platform. We are looking for people with experience building and operating large-scale, high-traffic Web applications and customer facing Web services. If you are an extremely productive contributor with a get-it-done attitude, work well in a highly collaborative team and want to work in an environment where software engineers are not just cubicle coders but full participants in shaping the product and the business then this job is for you. Serious experience with the following technologies is desired - Linux, Apache, HAProxy, memcached, memcacheq, Java, JSON, Tokyo Tyrant, MongoDB and MySQL.

Posted via email from Kinetic

December 24, 2009

Sunset BBQ

December 21, 2009

Evening on Maui

December 17, 2009

Holiday cookies

Algorithmic (almost) content creation

The choice quote is:

Instead of trying to raise the market value of online content to match the cost of producing it — perhaps an impossible proposition — the secret is to cut costs until they match the market value.

The costs to be cut are the costs of creation (manufacturing). The delivery costs are already nearly zero. Currently Demand Media is generating answers to unfulfilled questions using 'crowd sourcing' and blending media assets like video and photos and quickly written text. I wonder if someday even the text could be auto-generated.

I'm sure in the next six months we'll see a blooming of clones - 'DemandMedia for FooBar' style.

Quite a while ago I had thought about what it would take to build a content site with heavy automation on the gathering, review and approval of content. But I had not thought of optimizing that process based on audience demand. Quite clever really.

Update

Just found this post on ReadWriteWeb from a writer that previously worked with DemandMedia - required reading to see things from the viewpoint of someone actually creating DemandMedia content.

Choice quote:

They [writers] appear to be overwhelmingly women, often with children, often English majors or journalism students, looking for a way to do what they love and make a little money at it.

Compare those demographics to Wikipedia: more than 80% male, more than 65% single, more than 85% without children, around 70% under the age of 30.